Convolutional Neural Network from Scratch

This is a two-part series. In this part we walk through the forward pass of a convolutional neural network. Explanations are kept brief because the code itself is commented.

Image credit: [sourced from google]


Import Libraries & Data

import numpy as np

import matplotlib.pyplot as plt

import skimage.data

# Reading the image

img = skimage.data.chelsea()

# Converting the image into gray.

#img = skimage.color.rgb2gray(img)

print(img.shape)

plt.imshow(img)

(300, 451, 3)

<matplotlib.image.AxesImage at 0x1f452f43088>

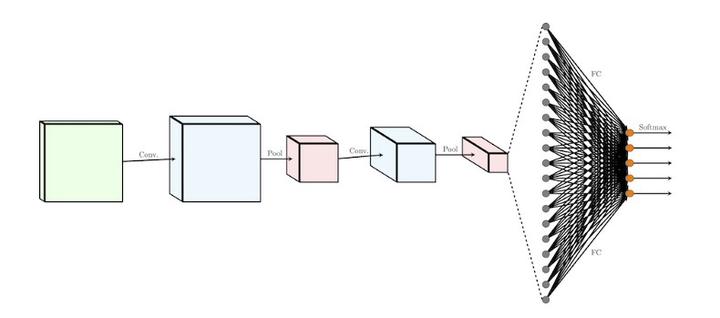

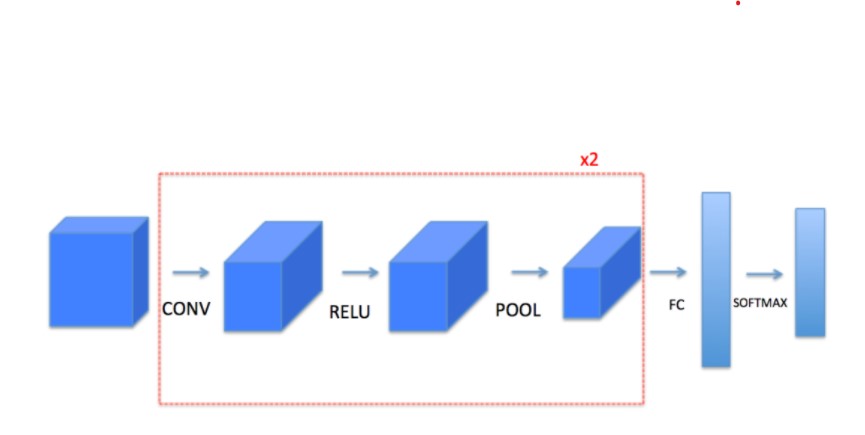

Architecture

Functions

- zero padding

- convolution operation

- forward pass in convolution

- pooling operation forward

- backward pass in convolution

- pooling operation backward

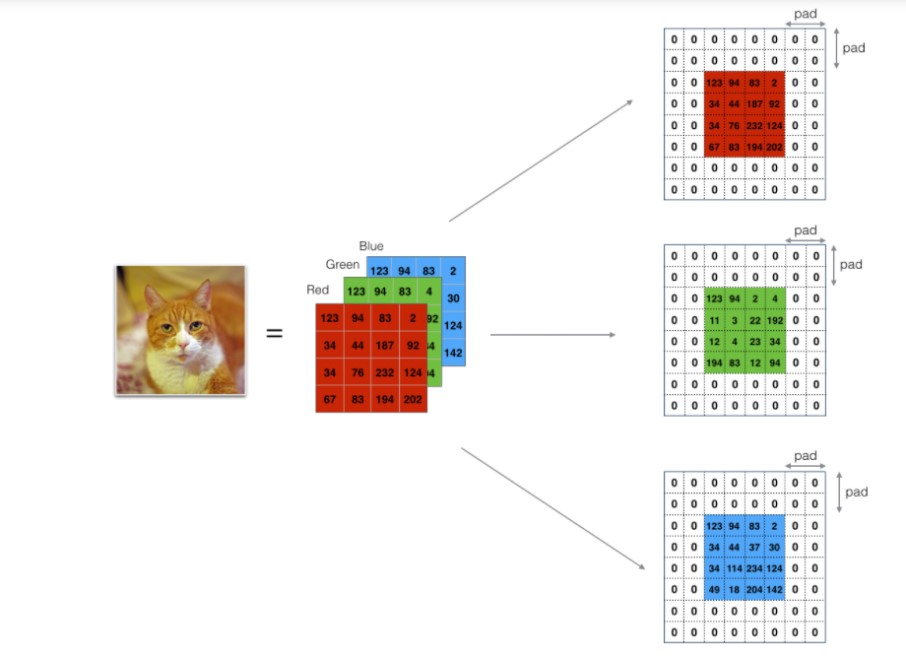

Zero padding

def zero_pad(X, pad):
    """
    Pad all images of the dataset X with zeros. The padding is applied to the height and width of an image,
    as illustrated in Figure 1.

    Argument:
    X -- python numpy array of shape (m, n_H, n_W, n_C) representing a batch of m images
    pad -- integer, amount of padding around each image on vertical and horizontal dimensions

    Returns:
    X_pad -- padded image of shape (m, n_H + 2*pad, n_W + 2*pad, n_C)
    """
    # Pad only the height and width axes; the batch and channel axes are left untouched.
    X_pad = np.pad(X, ((0, 0), (pad, pad), (pad, pad), (0, 0)), mode="constant", constant_values=(0, 0))
    return X_pad

x=np.expand_dims(img, axis=0)

x_pad=zero_pad(x,20)

print(x.shape,x_pad.shape)

fig,axs=plt.subplots(1,2)

axs[0].imshow(x[0,:,:,:])

axs[0].set_title('X')

axs[1].imshow(x_pad[0,:,:,:])

axs[1].set_title('X_pad')

(1, 300, 451, 3) (1, 340, 491, 3)

Text(0.5, 1.0, 'X_pad')
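To see what the padding does at the pixel level, here is a minimal sketch on a toy batch. The array `toy` and its values are made up for illustration; the `np.pad` call uses the same pattern as `zero_pad` above.

```python
import numpy as np

# Hypothetical toy batch (not from the post): 1 image, 2x2 pixels, 1 channel.
toy = np.arange(1, 5).reshape(1, 2, 2, 1)

# Same call pattern as zero_pad: pad only the height and width axes.
pad = 1
toy_pad = np.pad(toy, ((0, 0), (pad, pad), (pad, pad), (0, 0)),
                 mode="constant", constant_values=0)

print(toy.shape, toy_pad.shape)  # (1, 2, 2, 1) (1, 4, 4, 1)
print(toy_pad[0, :, :, 0])       # the original 2x2 block surrounded by a ring of zeros
```

Height and width each grow by 2*pad, while the batch size and the number of channels stay the same.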

Convolution operation

def conv_single_step(a_slice_prev, W, b):
    """
    Apply one filter defined by parameters W on a single slice (a_slice_prev) of the output activation
    of the previous layer.

    Arguments:
    a_slice_prev -- slice of input data of shape (f, f, n_C_prev)
    W -- Weight parameters contained in a window - matrix of shape (f, f, n_C_prev)
    b -- Bias parameters contained in a window - matrix of shape (1, 1, 1)

    Returns:
    Z -- a scalar value, the result of convolving the sliding window (W, b) on a slice x of the input data
    """
    # Element-wise product between the slice and the filter
    s = np.multiply(a_slice_prev, W)
    # Sum over all entries of the window
    Z = np.sum(s)
    # Add the bias as a plain float (np.float was removed in NumPy 1.24)
    Z = Z + float(np.squeeze(b))
    return Z

l1_filter=np.zeros((3,3,3))

# channel 0: vertical-edge pattern
l1_filter[:, :, 0] = np.array([[-1, 0, 1],
                               [-1, 0, 1],
                               [-1, 0, 1]])

# channels 1 and 2: horizontal-edge pattern
l1_filter[:, :, 1] = np.array([[1, 1, 1],
                               [0, 0, 0],
                               [-1, -1, -1]])

l1_filter[:, :, 2] = np.array([[1, 1, 1],
                               [0, 0, 0],
                               [-1, -1, -1]])

#for a slice

np.random.seed(1)

a_slice_prev = img[20:23,20:23,:]

plt.imshow(img[20:23,20:23,:])

W = l1_filter

b = np.random.randn(1, 1, 1)

Z = conv_single_step(a_slice_prev, W, b)

print("Z =", Z)

Z = -19.37565463633676
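The single step is just "multiply element-wise, sum, add the bias". A small hand-checkable sketch, assuming a made-up single-channel patch and bias (none of these values come from the post):

```python
import numpy as np

# Hypothetical 3x3 single-channel patch: dark on the left, bright on the right.
patch = np.array([[0, 0, 10],
                  [0, 0, 10],
                  [0, 0, 10]], dtype=float).reshape(3, 3, 1)

# Vertical-edge filter, same pattern as channel 0 of l1_filter.
kernel = np.array([[-1, 0, 1],
                   [-1, 0, 1],
                   [-1, 0, 1]], dtype=float).reshape(3, 3, 1)

bias = 0.5  # illustrative value

# Element-wise product, sum over the window, then add the bias.
z = np.sum(patch * kernel) + bias
print(z)  # 30.5
```

Only the right-hand column contributes (3 x 10 x 1 = 30), so the filter responds strongly to the vertical edge in the patch.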

Convolution forward operation

def conv_forward(A_prev, W, b, hparameters):

"""

Implements the forward propagation for a convolution function

Arguments:

A_prev -- output activations of the previous layer,

numpy array of shape (m, n_H_prev, n_W_prev, n_C_prev)

W -- Weights, numpy array of shape (f, f, n_C_prev, n_C)

b -- Biases, numpy array of shape (1, 1, 1, n_C)

hparameters -- python dictionary containing "stride" and "pad"

Returns:

Z -- conv output, numpy array of shape (m, n_H, n_W, n_C)

cache -- cache of values needed for the conv_backward() function

"""

m, n_H_prev, n_W_prev, n_C_prev =A_prev.shape

f, f, n_C_prev, n_C =W.shape

stride=hparameters["stride"]

pad =hparameters["pad"]

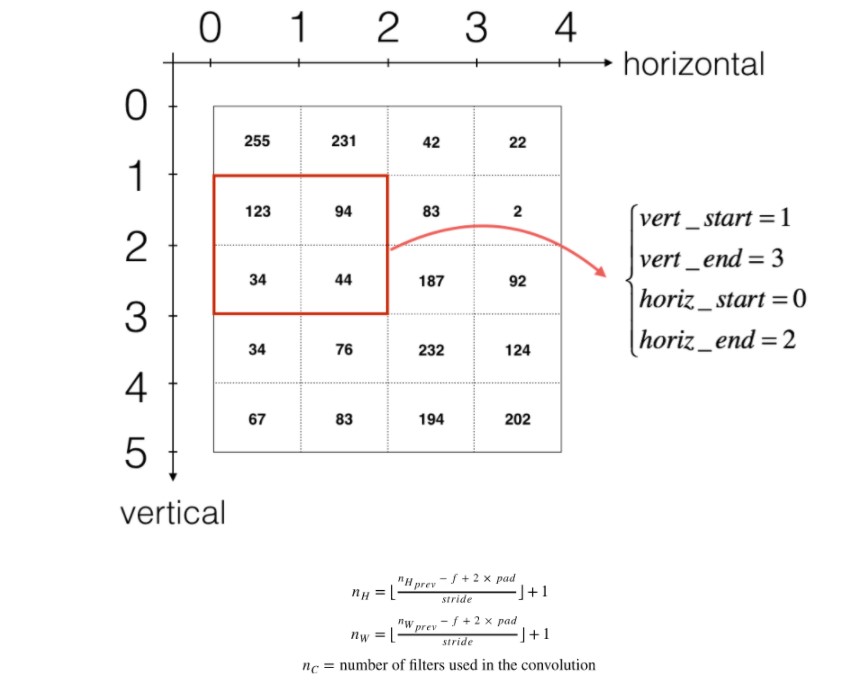

#step 1 calculate final n_H , n_w post convolution operation

n_H = 1+int((n_H_prev+2*pad-f)/stride)

n_W = 1+int((n_W_prev+2*pad-f)/stride)

#step 2 pad the image

A_prev_pad=zero_pad(A_prev, pad)

# Initialize the output volume Z with zeros. (≈1 line)

Z = np.zeros((m,n_H,n_W,n_C))

A=np.zeros((m,n_H,n_W,n_C))

#step 3 generate sub parts of image for convolution operation for all m training examples

for i in range(m):

a_prev_pad=A_prev_pad[i,:,:,:] #get the ith image from m images

for h in range(int(n_H)): #loop over vertical axis of image

vert_start = h*stride

vert_end = vert_start+f

for w in range(int(n_W)):# loop over horizontal axis

horiz_start = w*stride

horiz_end = horiz_start+f

for c in range(n_C):# loop over the new no of channels(#filters)

#for all the channel in previous image

a_slice_prev = a_prev_pad[vert_start:vert_end,horiz_start:horiz_end,0:n_C_prev]

weights = W[:,:,:,c] #get the value of filter and bias for a particular filter

bias =b[:,:,:,c]

Z[i,h,w,c]=conv_single_step(a_slice_prev,weights,bias)

A[i,h,w,c] =np.where(Z[i,h,w,c]>0,Z[i,h,w,c],0)

assert(Z.shape==(m,n_H,n_W,n_C))

cache=(A_prev,W,b,hparameters)

return A,cache

#for the entire image

filter_stack = np.zeros((3,3,3,3))

for i in range(3):
    filter_stack[:,:,:,i]=l1_filter

filter_stack

A_prev = np.expand_dims(img, axis=0) # 1 example: image of height=300, width=451, no. of channels=3

W = filter_stack # filters of height=3, width=3, no. of channels same as the image (3), no. of filters=3

b = np.random.randn(1,1,1,3) # one bias of shape (1,1,1) per filter, so 3 in total

hparameters = {"pad" : 1,
               "stride": 2}

A, cache_conv = conv_forward(A_prev, W, b, hparameters)

plt.imshow(A[0,:,:,:]) # conv_forward returns the activations A; Z still holds the scalar from the single-slice example

Clipping input data to the valid range for imshow with RGB data ([0..1] for floats or [0..255] for integers).

<matplotlib.image.AxesImage at 0x1f4536ad1c8>

A CONV layer should also apply an activation. In conv_forward above this is a ReLU, so each output position is computed in two steps:

- Convolve the window to get back one output neuron Z[i, h, w, c] = …

- Apply activation A[i, h, w, c] = activation(Z[i, h, w, c])
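The activation step can also be applied to the whole volume at once instead of element by element. A minimal sketch, assuming ReLU as the activation and a made-up pre-activation volume `Z_toy` (a hypothetical name, not a variable from the post):

```python
import numpy as np

# Hypothetical pre-activation values for a 1x2x2x1 output volume.
Z_toy = np.array([[-3.0, 0.0],
                  [2.5, -0.1]]).reshape(1, 2, 2, 1)

# ReLU applied to the whole volume in one call.
A_toy = np.maximum(Z_toy, 0)

# Same result as the np.where form used inside the loops.
A_where = np.where(Z_toy > 0, Z_toy, 0)

print(A_toy[0, :, :, 0])
```

Negative entries become 0 and positive entries pass through unchanged, so only the 2.5 survives here.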

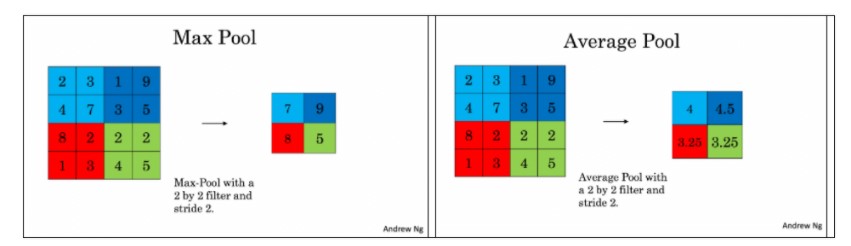
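The shapes printed in this post can be checked by hand. A minimal sketch, assuming the usual floor-division size formula that the code computes with `int(...)`; `conv_output_size` is a hypothetical helper name, not part of the post's code.

```python
def conv_output_size(n_prev, f, pad, stride):
    """Spatial output size: floor((n_prev + 2*pad - f) / stride) + 1."""
    return (n_prev + 2 * pad - f) // stride + 1

# Convolution on the 300x451 image with f=3, pad=1, stride=2.
n_H = conv_output_size(300, f=3, pad=1, stride=2)
n_W = conv_output_size(451, f=3, pad=1, stride=2)
print(n_H, n_W)  # 150 226

# Pooling has no padding; with f=3 and stride=1 on the conv output:
pool_H = conv_output_size(n_H, f=3, pad=0, stride=1)
pool_W = conv_output_size(n_W, f=3, pad=0, stride=1)
print(pool_H, pool_W)  # 148 224
```

The second pair matches the (1, 148, 224, 3) shape printed after max pooling with a stride of 1.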

Pooling forward

def pool_forward(A_prev, hparameters, mode = "max"):
    """
    Implements the forward pass of the pooling layer

    Arguments:
    A_prev -- Input data, numpy array of shape (m, n_H_prev, n_W_prev, n_C_prev)
    hparameters -- python dictionary containing "f" and "stride"
    mode -- the pooling mode you would like to use, defined as a string ("max" or "average")

    Returns:
    A -- output of the pool layer, a numpy array of shape (m, n_H, n_W, n_C)
    cache -- cache used in the backward pass of the pooling layer, contains the input and hparameters
    """
    m, n_H_prev, n_W_prev, n_C_prev = A_prev.shape
    stride = hparameters["stride"]
    f = hparameters["f"]

    # step 1: calculate the final n_H, n_W after the pooling operation
    n_H = int(1 + (n_H_prev - f) / stride)
    n_W = int(1 + (n_W_prev - f) / stride)
    n_C = n_C_prev  # pooling keeps the number of channels

    # step 2: initialize the output volume A with zeros
    A = np.zeros((m, n_H, n_W, n_C))

    # step 3: slide the pooling window over every one of the m training examples
    for i in range(m):
        a_prev = A_prev[i,:,:,:]  # get the ith image from m images
        for h in range(n_H):  # loop over vertical axis of image
            vert_start = h*stride
            vert_end = vert_start + f
            for w in range(n_W):  # loop over horizontal axis
                horiz_start = w*stride
                horiz_end = horiz_start + f
                for c in range(n_C):  # loop over the channels
                    # pool each channel independently, so slice only channel c
                    a_slice_prev = a_prev[vert_start:vert_end, horiz_start:horiz_end, c]
                    # Compute the pooling operation on the slice.
                    if mode == "max":
                        A[i, h, w, c] = np.max(a_slice_prev)
                    elif mode == "average":
                        A[i, h, w, c] = np.mean(a_slice_prev)

    assert(A.shape == (m, n_H, n_W, n_C))
    cache = (A_prev, hparameters)
    return A, cache

#stride of 1

np.random.seed(1)

A_prev = A

hparameters = {"stride" : 1, "f": 3}

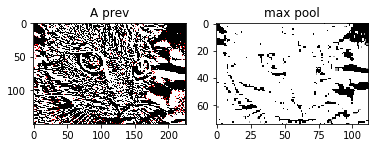

fig,axs=plt.subplots(1,2)

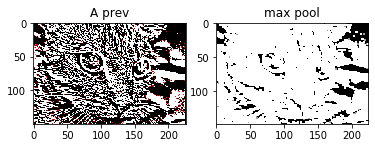

A, cache = pool_forward(A_prev, hparameters)

print("mode = max")

#print("A.shape = " + str(A.shape))

#print("A =\n", A)

print()

print(A.shape)

axs[0].imshow(A_prev[0,:,:,:])

axs[0].set_title('A prev')

axs[1].imshow(A[0,:,:,:])

axs[1].set_title('max pool')

Clipping input data to the valid range for imshow with RGB data ([0..1] for floats or [0..255] for integers).

Clipping input data to the valid range for imshow with RGB data ([0..1] for floats or [0..255] for integers).

mode = max

(1, 148, 224, 3)

Text(0.5, 1.0, 'max pool')

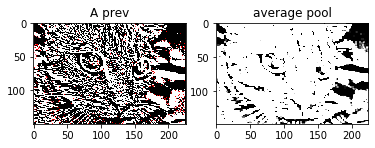

A, cache = pool_forward(A_prev, hparameters, mode = "average")

print("mode = average")

#print("A.shape = " + str(A.shape))

#print("A =\n", A)

fig,axs=plt.subplots(1,2)

axs[0].imshow(A_prev[0,:,:,:])

axs[0].set_title('A prev')

axs[1].imshow(A[0,:,:,:])

axs[1].set_title('average pool')

Clipping input data to the valid range for imshow with RGB data ([0..1] for floats or [0..255] for integers).

Clipping input data to the valid range for imshow with RGB data ([0..1] for floats or [0..255] for integers).

mode = average

Text(0.5, 1.0, 'average pool')

#stride of 2

np.random.seed(1)

hparameters = {"stride" : 2, "f": 3}

fig,axs=plt.subplots(1,2)

A, cache = pool_forward(A_prev, hparameters)

print("mode = max")

#print("A.shape = " + str(A.shape))

#print("A =\n", A)

print()

axs[0].imshow(A_prev[0,:,:,:])

axs[0].set_title('A prev')

axs[1].imshow(A[0,:,:,:])

axs[1].set_title('max pool')

Clipping input data to the valid range for imshow with RGB data ([0..1] for floats or [0..255] for integers).

Clipping input data to the valid range for imshow with RGB data ([0..1] for floats or [0..255] for integers).

mode = max

Text(0.5, 1.0, 'max pool')
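The effect of the pooling mode and the stride is easier to see on a tiny array than on the cat image. A minimal sketch, assuming a made-up 4x4 single-channel feature map; `pool2d` is a hypothetical 2-D helper written for this illustration, not the post's pool_forward.

```python
import numpy as np

# Hypothetical 4x4 single-channel feature map.
fmap = np.array([[1, 3, 2, 0],
                 [4, 6, 5, 1],
                 [7, 9, 8, 2],
                 [0, 1, 3, 4]], dtype=float)

def pool2d(x, f, stride, mode="max"):
    """Pool a 2-D array with an f x f window (illustrative helper)."""
    n_H = (x.shape[0] - f) // stride + 1
    n_W = (x.shape[1] - f) // stride + 1
    out = np.zeros((n_H, n_W))
    reduce_fn = np.max if mode == "max" else np.mean
    for h in range(n_H):
        for w in range(n_W):
            window = x[h * stride:h * stride + f, w * stride:w * stride + f]
            out[h, w] = reduce_fn(window)
    return out

max_s2 = pool2d(fmap, f=2, stride=2, mode="max")      # [[6, 5], [9, 8]]
avg_s2 = pool2d(fmap, f=2, stride=2, mode="average")  # [[3.5, 2.0], [4.25, 4.25]]
max_s1 = pool2d(fmap, f=2, stride=1, mode="max")      # 3x3 output, windows overlap

print(max_s2)
print(avg_s2)
print(max_s1.shape)
```

With a stride of 2 the windows do not overlap and the map shrinks to 2x2. With a stride of 1 neighbouring windows share pixels and the output is only one row and one column smaller than the input.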

Put it all together (CNN)

We will see this in the second part of the post.